In this post we share our understanding of how the principles of parallel computing apply to modern information systems. We also argue that anyone developing such systems should study the concepts of parallel and distributed computing and apply them in practice.

Parallel computing

First, a little history. In 1965, Gordon Moore, one of the founders of Intel, noticed a pattern: each new generation of microchips appeared about a year after its predecessor and held roughly twice as many transistors. In other words, the number of components on an integrated circuit was doubling about every year; in 1975 Moore revised the pace to a doubling roughly every 24 months. This observation became known as Moore’s Law. In his 1965 forecast, Moore predicted that the number of components on a chip would grow from 2^6 (64) in 1965 to 2^16 (about 65 thousand) by 1975.

To make practical use of the additional computing power that Moore’s law predicted, parallel computing eventually became necessary. For several decades, processor manufacturers steadily increased clock speeds and instruction-level parallelism, so old single-threaded applications ran faster on new processors without any changes to the program code.

Since about the mid-2000s, processor manufacturers have favored multi-core architectures, but to get the full benefit of the added CPU performance, programs must be rewritten to run in parallel. Here a problem arises: not every algorithm lends itself to parallelization. According to Amdahl’s law, the part of a program that must run sequentially sets a fundamental limit on the speedup that additional processors can deliver, even on a supercomputer.
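
To make this limit concrete, here is a minimal sketch of Amdahl’s law in Python. The function names `amdahl_speedup` and `amdahl_limit` and the 95% figure are our own illustrative assumptions, not part of any library. The formula is speedup = 1 / ((1 - p) + p / n), where p is the fraction of the work that can run in parallel and n is the number of processors.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Theoretical speedup when `parallel_fraction` (0..1) of the work
    is spread across `workers` processors and the rest stays sequential."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


def amdahl_limit(parallel_fraction: float) -> float:
    """Upper bound on the speedup as the number of workers grows without limit."""
    serial_fraction = 1.0 - parallel_fraction
    return float("inf") if serial_fraction == 0 else 1.0 / serial_fraction


if __name__ == "__main__":
    # Hypothetical program in which 95% of the work can be parallelized.
    for n in (1, 2, 8, 64, 1024):
        print(f"{n:>5} workers -> speedup {amdahl_speedup(0.95, n):.2f}")
    print(f"upper bound -> {amdahl_limit(0.95):.2f}")
```

Even with 95% of the work parallelized, the remaining 5% caps the speedup at 20 times, no matter how many processors are added.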

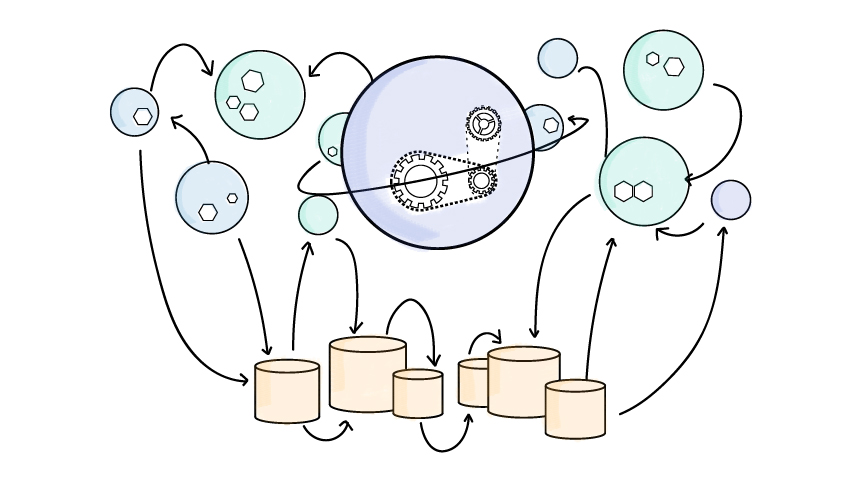

To get past the limits of a single machine, distributed computing approaches are used. Distributed computing solves time-consuming computational problems using multiple computers, most often combined into one parallel computing system. Each individual computation may still run sequentially, but the distributed system handles many such computations at the same time. Unlike a local supercomputer, a distributed multiprocessor system can keep increasing its performance by scaling out, that is, by adding more machines.

Since about 2005, computers have shipped with multi-core processors as standard, which makes parallel computation possible on ordinary hardware. Modern network technologies, in turn, make it possible to connect hundreds or thousands of computers. Together, these developments led to the emergence of so-called “cloud computing.”

Application of parallel computing

Current information systems, such as e-commerce systems, must provide high-quality services to their customers. Companies compete by continually inventing new services and information products. Services must be designed for high load and high resiliency, because their users are not a single office or a single country but the whole world.

At the same time, projects must remain economically feasible: there is little point in paying for expensive server hardware if it runs old software that uses only a fraction of its computing power.

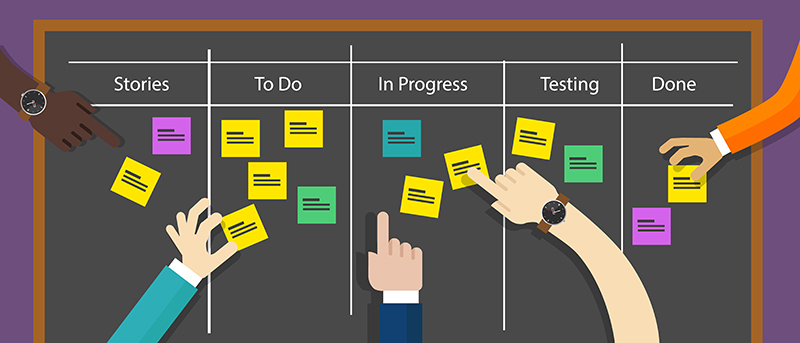

Application developers face a new problem: they need to rewrite information systems to meet the requirements of modern business, and they need to make better use of server resources to reduce the total cost of ownership.

The tasks that modern information systems must handle are diverse. They range from machine learning and big data analytics to keeping the existing core functionality stable during peak periods, such as a big sale in an online store. All of these tasks can be addressed with a combination of parallel and distributed computing, for example by adopting a microservice architecture.

Quality control of services

To measure the actual quality of service that a client receives, the concept of a service-level agreement (SLA) is used: a set of agreed statistical metrics of system performance.

For example, developers can set the goal that 95% of all user requests are served with a response time of no more than 200 ms. This is a quite realistic non-functional requirement, because users do not like to wait.
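
A target like this can be checked against measured response times. Below is a minimal sketch that uses the nearest-rank percentile method; the function names `percentile` and `meets_sla` and the sample data are hypothetical, and real monitoring tools may compute percentiles differently.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    `pct` percent of all samples are less than or equal to it."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_sla(response_times_ms, pct=95, threshold_ms=200):
    """True if `pct` percent of requests finished within `threshold_ms`."""
    return percentile(response_times_ms, pct) <= threshold_ms


if __name__ == "__main__":
    # Hypothetical measurements: 19 fast requests and one slow outlier.
    samples = [120] * 19 + [900]
    print("95th percentile, ms:", percentile(samples, 95))
    print("SLA met:", meets_sla(samples))
```

A percentile is used rather than an average because a few very slow requests can hide behind a good mean, while the percentile states directly what share of users got an acceptable response.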

To assess user satisfaction with a service, you can use the Apdex score, which classifies every request by its response time against a threshold T. For example, with a threshold of T = 1.2 seconds, a request answered within 1.2 seconds counts as satisfied. A request that takes longer than 1.2 seconds but no more than 4T (4.8 seconds) counts as tolerating, and a request that takes longer than 4.8 seconds counts as frustrated. The score is the number of satisfied requests plus half the number of tolerating requests, divided by the total number of requests, so it ranges from 0 (no user is satisfied) to 1 (every user is satisfied).
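
As an illustration, here is a minimal sketch of the standard Apdex calculation, (satisfied + tolerating / 2) / total, with the threshold T = 1.2 seconds from the example. The function name `apdex` and the sample response times are our own assumptions.

```python
def apdex(response_times_s, t=1.2):
    """Apdex score for a list of response times in seconds.

    satisfied:  response time <= t
    tolerating: t < response time <= 4 * t
    frustrated: response time > 4 * t (contributes nothing to the score)
    """
    if not response_times_s:
        raise ValueError("response_times_s must not be empty")
    satisfied = sum(1 for r in response_times_s if r <= t)
    tolerating = sum(1 for r in response_times_s if t < r <= 4 * t)
    return (satisfied + tolerating / 2) / len(response_times_s)


if __name__ == "__main__":
    # Hypothetical sample: three satisfied, one tolerating, one frustrated.
    times = [0.5, 1.0, 1.2, 3.0, 6.0]
    print(f"Apdex = {apdex(times):.2f}")
```

For this sample the score is (3 + 1 / 2) / 5 = 0.7.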

Conclusions

To sum up, developing microservices in practice requires understanding and applying distributed and parallel computing.

Of course, you will have to sacrifice some things:

- ease of development and maintenance – developers must put extra effort into implementing distributed modules;

- strict consistency – where the data requires ACID guarantees, they are hard to provide across distributed services and call for other approaches;

- computing power – part of it is spent on network communication and serialization;

- time – it takes effort to adopt the DevOps practices needed for more sophisticated monitoring and deployment.

In return, we get, in our opinion, much more:

- the ability to reuse entire production-ready modules and, as a result, to bring products to market quickly;

- high system scalability, which means serving a greater number of customers without violating the SLA;

- the ability to manage huge amounts of data, again without violating the SLA, by accepting eventual consistency, a trade-off described by the CAP theorem;

- the ability to record every change in the state of the system for further analysis and machine learning.

About The Author: Yotec Team
